AI’s hype and promise in cybersecurity are balanced by trepidation and risk. Here’s where to focus.


Cybersecurity and AI: Enabling Security While Managing Risk

For CISOs, the challenge lies in turning AI disruption into opportunity

Generative AI (GenAI) promises to revolutionise cybersecurity, but initial attempts to fully automate complex security tasks have often been disappointing.

Vendors also offer only a limited glimpse of GenAI's potential, so cybersecurity leaders must explore the potential and risk for themselves.

Download Gartner’s cybersecurity and AI predictions to:

Access data-driven insights and actionable guidance

Prepare for the future of GenAI

Equip your team to tackle upcoming challenges and seize opportunities

AI’s impact on cybersecurity and the CISO

To address the various impacts of AI on cybersecurity, CISOs must first understand the hype, the risks and the potential.

- AI Hype in Cybersecurity

- AI Cybersecurity Risks

- AI Cybersecurity Potential

Don’t expect an AI holy grail

GenAI’s initial hype tantalised many organisations into rushing in without much forethought. Such lack of planning is risky, because the reward rarely matches the hype.

What often follows is months of trial and error, then a retroactive assessment, a financial write-off and sometimes a sacrificial executive departure (depending on the size of the write-off). The larger impact comes later, in the form of opportunities lost through the delayed rollout of generative capabilities.

The GenAI hype is bound to create disillusionment in the short term, as external pressures to increase security operations productivity collide with low-maturity features and fragmented workflows.

Watch out for common symptoms of an ill-prepared GenAI integration:

Relying on “improved productivity” as a key metric because no concrete metrics exist to measure GenAI benefits and business impact, while paying premium prices for GenAI add-ons

Difficulty integrating AI assistants into collaboration workflows within security operations teams or with a security operations provider

“Prompt fatigue”: too many tools, each offering its own interactive interface for querying threats and incidents

To counteract the distortion that comes with overblown AI claims, conduct roadmap planning. Factor in all possibilities, balancing cybersecurity realities with GenAI hopes:

Take a multiyear approach. Start with application security and security operations, then progressively integrate GenAI offerings when they augment security workflows.

Ask “Is it worth it?” Set expectations for your investment and measure yourself against those targets. Evaluate efficiency gains along with costs. Refine detection and productivity metrics to account for new GenAI cybersecurity features.

Prioritise AI augmentation of the workforce (not just task automation). Plan for changes in long-term skill requirements due to GenAI.

Account for privacy challenges and balance the expected benefits with the risks of adopting GenAI in security.
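One way to make the “Is it worth it?” question concrete is to compare a measured baseline with a pilot period and set the result against the add-on cost. The sketch below is illustrative only: the metric (minutes to triage an alert), the function and field names, and every figure are assumptions chosen for the example, not Gartner data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotResult:
    minutes_saved_per_alert: float
    monthly_value: float  # analyst time saved, expressed as cost
    monthly_net: float    # value minus the GenAI add-on price


def evaluate_pilot(baseline_minutes, pilot_minutes, alerts_per_month,
                   analyst_cost_per_hour, addon_cost_per_month):
    """Compare mean triage time before and during a GenAI pilot."""
    saved = mean(baseline_minutes) - mean(pilot_minutes)
    value = saved * alerts_per_month * analyst_cost_per_hour / 60
    return PilotResult(saved, value, value - addon_cost_per_month)


# Hypothetical inputs: triage times sampled before and during the pilot.
result = evaluate_pilot(
    baseline_minutes=[30, 40, 50],
    pilot_minutes=[25, 35, 30],
    alerts_per_month=600,
    analyst_cost_per_hour=80.0,
    addon_cost_per_month=5000.0,
)
print(result)
```

A negative `monthly_net` would suggest the feature does not yet pay for itself on this metric alone. Time saved is only one input, so a fuller assessment would also track detection quality.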

Prepare your best defense

GenAI is just the latest in a series of technologies that have promised huge boosts in productivity fueled by automation of tasks. Past attempts to fully automate complex security activities have rarely been successful and can be a wasteful distraction.

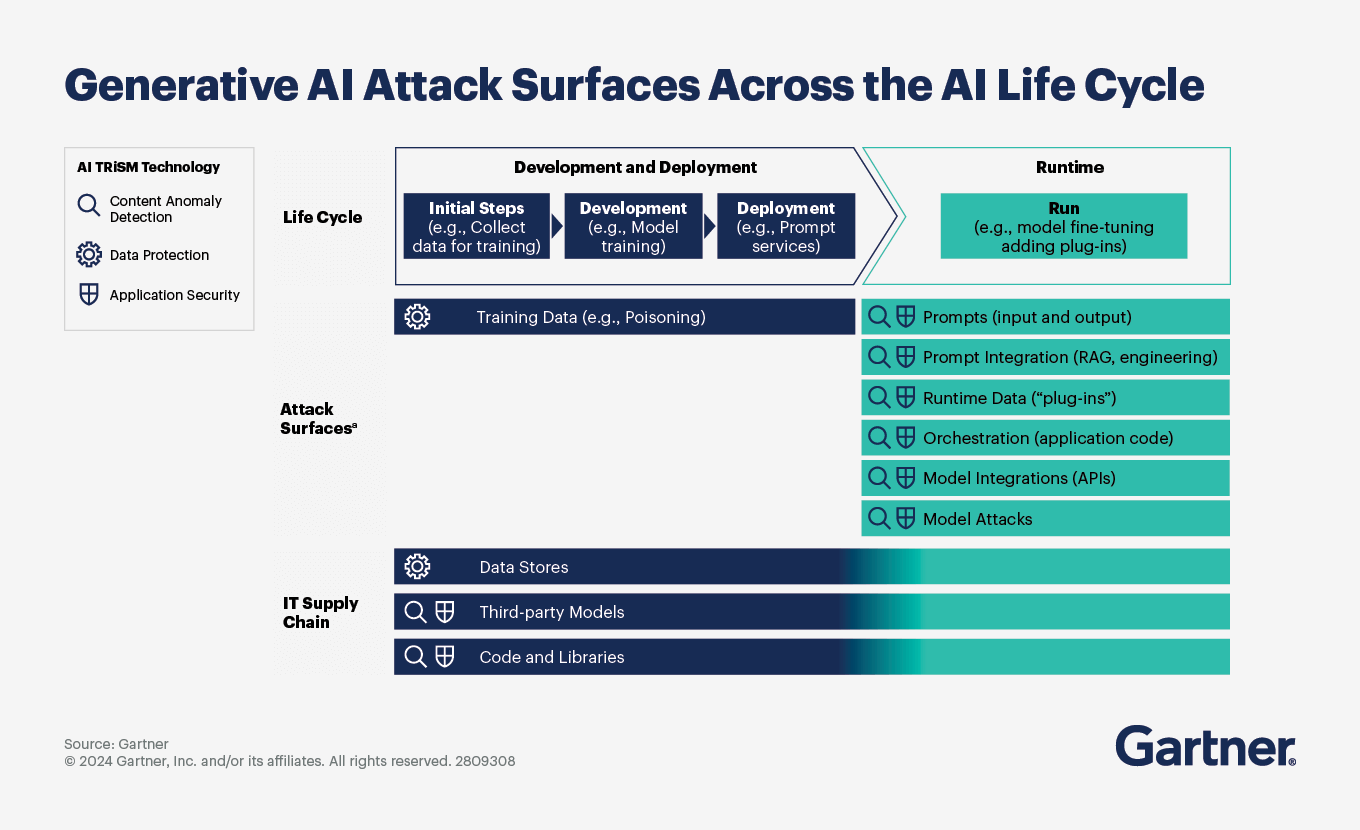

Although there are benefits to using GenAI models and third-party large language models (LLMs), there are also unique user risks that require new security practices. These fall into three categories:

Content anomaly detection

- Hallucinations and other inaccurate, illegal, copyright-infringing, unwanted or unintended outputs that compromise decision making or cause brand damage
- Unacceptable or malicious use
- Unmanaged enterprise content transmitted through prompts, compromising confidential data inputs

Data protection

- Data leakage, integrity and compromised confidentiality of content and user data in a hosted vendor environment
- Inability to govern privacy and data protection policies in externally hosted environments
- Difficulty conducting privacy impact assessments and complying with regional regulations due to the black-box nature of third-party models
- No easy way to remove raw data once it has been put into a model, short of rebuilding the model, which is impractical and extremely expensive

AI application security

- Adversarial prompting attacks, including business logic abuses and direct and indirect prompt injections
- Vector database attacks
- Hacker access to model states and parameters

Externally hosted LLMs and other GenAI models increase these risks, as enterprises cannot directly control their application processes and data handling and storage. However, there is also risk in on-premises models hosted by the enterprise — especially when security and risk controls are lacking. These three categories of risk confront users during runtime of AI applications and models.
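As an illustration of one runtime control for the risk of unmanaged enterprise content travelling in prompts, the sketch below redacts sensitive-looking strings before a prompt leaves the enterprise. It is a minimal example under stated assumptions: the three patterns (including the `TKN-` token format) are hypothetical, and a real control would follow the organisation's own data classification rules.

```python
import re

# Hypothetical patterns for illustration only. "TOKEN" is an invented
# internal credential format, not a real vendor's key layout.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "TOKEN": re.compile(r"\bTKN-[0-9a-f]{12}\b"),
}


def redact_prompt(prompt):
    """Replace each match with a placeholder; return (redacted_text, findings)."""
    findings = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            findings.append((label, count))
    return prompt, findings


text = "User alice@example.com logged in from 10.0.0.5 with TKN-0123456789ab"
redacted, findings = redact_prompt(text)
print(redacted)
print(findings)
```

The `findings` list can be logged so that security teams see what kinds of data users attempt to send, without storing the sensitive values themselves.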

Opt for AI augments

Ironically, the conversational interface that brought GenAI into the spotlight is the culprit behind GenAI’s heightened risk of failure for internal enterprise use cases. There are two reasons for this increased risk of failure:

Usage and ROI. ROI depends on your employees using and benefiting from the technology. There’s no guarantee that employees will use a conversational interface. Without a clear value proposition, employees will not integrate GenAI into their daily workflows. Demonstrating benefit is an uphill battle as all you can track is usage; the rest is left to the user.

Prompts and hallucinations. Different users ask the same question in different ways and may receive just as many different answers. The quality of their prompts affects the quality of the answers, which may be hallucinations. Organisations must train employees on prompting, support them when they receive errant answers and invest in prompt preprocessing and response postprocessing to mitigate these issues.

To combat these roadblocks, support cybersecurity practitioners through prebuilt prompts based on their observed activity in a specific task, instead of expecting them to stop what they are doing to ask a question.

Use generative augments (GAs): add-ons, such as plugins or extensions, built on top of host applications to monitor the user. GAs take the observed data, along with data from other systems, and combine it with a preprogrammed set of prompts that guides users as they perform tasks. The prompts query an LLM and receive responses in a predefined format, which makes hallucinations easier to mitigate. The logic within the augment validates each response before passing it back to the user. The augment exposes no conversational (chat) interface.
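The generative augment flow described above (observed data, a prebuilt prompt, a response in a predefined format, validation before display) might be sketched as follows. This is a simplified illustration, not a reference design: the alert-triage task, the prompt wording, the JSON keys and the `call_llm` parameter are all assumptions, and `fake_llm` stands in for a real model call so the example runs offline.

```python
import json

# Hypothetical prebuilt prompt for one task (alert triage).
PROMPT_TEMPLATE = (
    "Classify the alert below. Reply with JSON only, using the keys "
    '"severity" (low, medium or high) and "summary" (one sentence).\n'
    "Alert: {alert}\nAsset context: {context}"
)
ALLOWED_SEVERITIES = {"low", "medium", "high"}


def validate_response(raw):
    """Return the parsed reply if it matches the predefined format, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or set(data) != {"severity", "summary"}:
        return None
    severity, summary = data["severity"], data["summary"]
    if not isinstance(severity, str) or severity not in ALLOWED_SEVERITIES:
        return None
    if not isinstance(summary, str) or not summary.strip():
        return None
    return data


def augment(alert, context, call_llm):
    """Build the prompt from observed data, query the model, validate the reply."""
    prompt = PROMPT_TEMPLATE.format(alert=alert, context=context)
    return validate_response(call_llm(prompt))


def fake_llm(prompt):
    # Stand-in for a real model call (assumption for this sketch).
    return '{"severity": "high", "summary": "Repeated failed logins on a domain controller."}'


print(augment("5 failed logins for admin", "domain controller", fake_llm))
```

Because the user never types a prompt, every analyst performing the same task sends the same wording, and any reply that fails validation is dropped rather than shown.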


FAQ on cybersecurity and AI

What is AI in cybersecurity?

AI (artificial intelligence) in cybersecurity refers to the application of AI technologies and techniques to enhance the security of computer systems, networks and data and to protect them from potential threats and attacks. AI enables cybersecurity systems to analyze vast amounts of data, identify patterns, detect anomalies and make intelligent decisions in real time to prevent, detect and respond to cyberthreats.

How is AI disrupting and transforming cybersecurity?

AI is revolutionising cybersecurity by improving threat detection, automating security operations, enhancing user authentication and providing advanced analytics capabilities.

What are the key considerations for integrating AI into existing cybersecurity infrastructure?

When integrating AI into existing cybersecurity infrastructure, there are several key considerations to keep in mind:

Data availability and quality

Compatibility and integration

Scalability and performance

Explainability and transparency

Human–machine collaboration

Ethical and legal considerations

Training and skill development

Vendor selection and expertise

Risk assessment and mitigation